Artificial intelligence

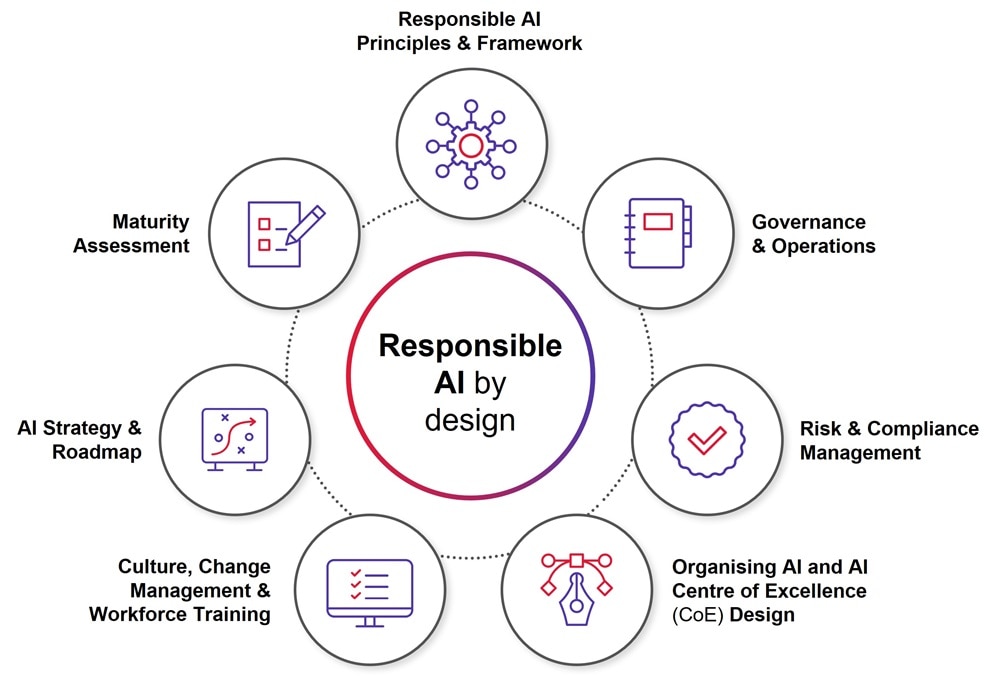

CGI is a trusted AI expert, helping clients demystify and deliver responsible AI. We combine our end-to-end capabilities in data science and machine learning with deep industry knowledge and technology engineering skills to generate new insights, experiences and business models powered by AI.
