Artificial intelligence

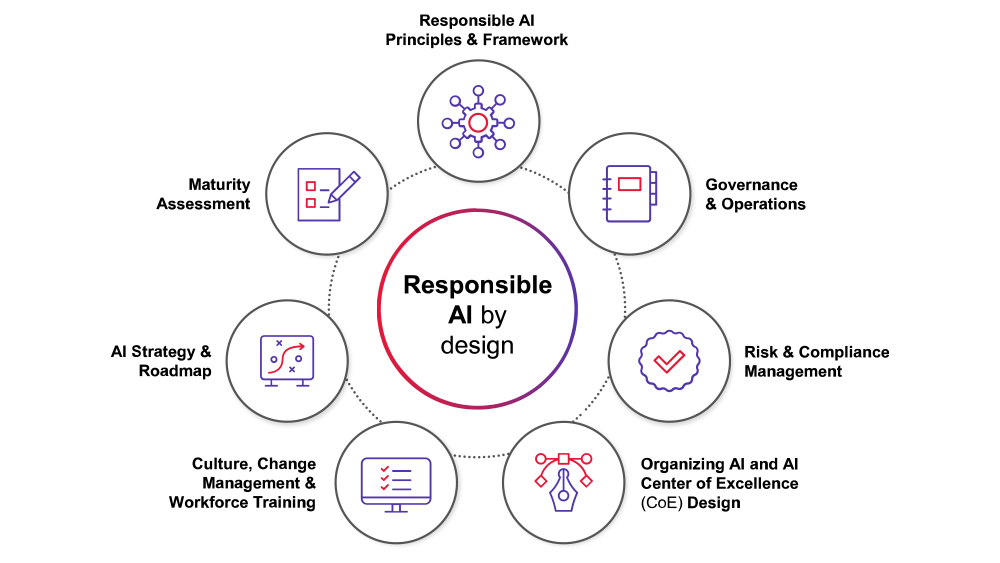

CGI is a trusted AI expert, helping clients demystify and deliver responsible AI. We combine our end-to-end capabilities in data science and machine learning with deep industry knowledge and technology engineering skills to generate new insights, experiences and business models powered by AI.
